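AI Code Review of GitHub PRs with Llama 3.1

Installing and Running Llama 3.1

A quick search turns up plenty of Llama 3.1 installation guides, and following any one of them gets you set up quickly.

Complete that setup before implementing and running the code below.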
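Once the setup is done, it can help to confirm that the local Ollama server is reachable before running anything else. The following is a minimal sketch, assuming Ollama listens on its default address `http://localhost:11434` (the same host the client code later in this post uses) and that the model was pulled under the name `llama3.1`. The helper names `list_local_models` and `has_model` are made up for illustration.

```python
import json
import urllib.error
import urllib.request
from typing import List, Optional


def list_local_models(base_url: str = "http://localhost:11434",
                      timeout: float = 3.0) -> Optional[List[str]]:
    """Return the names of locally available models, or None if the server is unreachable."""
    try:
        # GET /api/tags is the Ollama endpoint that lists local models.
        with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
            payload = json.load(resp)
    except (urllib.error.URLError, OSError, ValueError):
        return None
    return [m.get("name", "") for m in payload.get("models", [])]


def has_model(models: Optional[List[str]], wanted: str = "llama3.1") -> bool:
    """True if a local model matches the wanted name, ignoring the tag after ':'."""
    return bool(models) and any(name.split(":")[0] == wanted for name in models)


if __name__ == "__main__":
    models = list_local_models()
    if models is None:
        print("Ollama server is not reachable")
    else:
        print("llama3.1 available:", has_model(models))
```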

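Writing the AI Code Review Code

Let's write code that reviews code with AI, using Python and the Llama 3.1 model installed above. This is a deliberately simple implementation for demo purposes; building an API or similar tooling on top of it would make automation possible as well.

- CI/CD pipeline integration: automating code review through GitHub Actions can improve development efficiency.

- AI-based code quality improvement: the Llama 3.1 model can automatically assess the code quality of a PR and shorten review time.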
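For the GitHub Actions integration mentioned above, the script needs to learn which PR triggered the run. A minimal sketch, assuming the workflow runs on the `pull_request` event, where GitHub sets `GITHUB_REF` to `refs/pull/<number>/merge`. The function name `pr_number_from_ref` is hypothetical and not part of the original code.

```python
import os
import re
from typing import Optional


def pr_number_from_ref(ref: str) -> Optional[int]:
    """Extract the PR number from a ref such as 'refs/pull/2130/merge'."""
    matched = re.fullmatch(r"refs/pull/(\d+)/merge", ref)
    return int(matched.group(1)) if matched else None


if __name__ == "__main__":
    # GITHUB_REF is provided by the Actions runner; it is empty when run locally.
    number = pr_number_from_ref(os.environ.get("GITHUB_REF", ""))
    print("PR number:", number)
```

The resulting number could then be passed to the review entry point in place of a hard-coded PR number.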

Installing Dependency Packages

First, install the Python packages the implementation needs.

anyio==4.4.0
certifi==2024.7.4
cffi==1.16.0
charset-normalizer==3.3.2
colorful-print==0.1.0
cryptography==43.0.0
Deprecated==1.2.14
gitdb==4.0.11
GitPython==3.1.43
h11==0.14.0
httpcore==1.0.5
httpx==0.27.0
idna==3.7
ollama==0.3.1
pycparser==2.22
PyGithub==2.3.0
PyJWT==2.9.0
PyNaCl==1.5.0
requests==2.32.3
smmap==5.0.1
sniffio==1.3.1
typing_extensions==4.12.2
unidiff==0.7.5
urllib3==2.2.2
wrapt==1.16.0

Application Config and Util Functions

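These are the settings and utility functions the app uses.

# app/config.py
from functools import lru_cache


class _Config:
    github_api = "https://api.github.com"
    github_api_version = "2022-11-28"
    github_token = "TOKEN"
    # Named github_repo_path because the API functions read config.github_repo_path.
    github_repo_path = "{OWNER}/{REPO_NAME}"
    dir_main_code = "./repo/{REPO_NAME}/"
    clone_url = "{CLONE_URL}"


@lru_cache
def _get_config():
    # Cached so every import shares the same config instance.
    return _Config()


config = _get_config()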
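Hard-coding `github_token` is fine for a demo, but the value is easy to commit by accident. As a hedged alternative, the settings could be read from environment variables. The variable names (`GITHUB_TOKEN`, `GITHUB_REPO_PATH`, `CLONE_URL`), the `EnvConfig` class, and the `load_config` helper are assumptions for this sketch, not part of the original code.

```python
import os
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class EnvConfig:
    github_token: str
    github_repo_path: str  # "OWNER/REPO_NAME"
    clone_url: str
    github_api: str = "https://api.github.com"
    github_api_version: str = "2022-11-28"

    @property
    def dir_main_code(self) -> str:
        # Mirrors the "./repo/{REPO_NAME}/" layout used in the config above.
        return f"./repo/{self.github_repo_path.split('/')[-1]}/"


def load_config(env: Mapping[str, str] = os.environ) -> EnvConfig:
    """Build the config from environment variables, failing early on missing ones."""
    required = ("GITHUB_TOKEN", "GITHUB_REPO_PATH", "CLONE_URL")
    missing = [key for key in required if not env.get(key)]
    if missing:
        raise RuntimeError(f"missing environment variables: {', '.join(missing)}")
    return EnvConfig(
        github_token=env["GITHUB_TOKEN"],
        github_repo_path=env["GITHUB_REPO_PATH"],
        clone_url=env["CLONE_URL"],
    )
```

Failing at startup when a variable is missing tends to be easier to debug than a 401 response from GitHub later on.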

# app/utils.py
import re

from colorful_print import color


def read_file_to_str(path: str) -> str:
    with open(path, 'r', encoding='utf-8') as f:
        return f.read()


def get_start_line_from_pr_diff(diff: str):
    # Extract the target-side start line from a hunk header such as "@@ -1,4 +10,6 @@".
    # The line counts are optional because unified diffs omit a count of 1.
    matched = re.search(r"@@ -\d+(?:,\d+)? \+(\d+)(?:,\d+)? @@", diff)
    if matched:
        return int(matched.group(1))
    else:
        print("failed to get_start_line")
        return None


def colorful_dispatcher(c: str, msg: str, *args, **kwargs):
    # Look up the print function for the given color name and call it.
    dispatch = getattr(color, c)
    dispatch(msg, *args, **kwargs)


def red(msg: str, *args, **kwargs):
    colorful_dispatcher('red', msg, *args, **kwargs)


def green(msg: str, *args, **kwargs):
    colorful_dispatcher('green', msg, *args, **kwargs)


def yellow(msg: str, *args, **kwargs):
    colorful_dispatcher('yellow', msg, *args, **kwargs)


def blue(msg: str, *args, **kwargs):
    colorful_dispatcher('blue', msg, *args, **kwargs)


def magenta(msg: str, *args, **kwargs):
    colorful_dispatcher('magenta', msg, *args, **kwargs)


def cyan(msg: str, *args, **kwargs):
    colorful_dispatcher('cyan', msg, *args, **kwargs)

GitHub REST API Request Functions

API functions for fetching GitHub PR information, creating review comments, and so on.
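Most of the request functions in this section send the same three headers. A small helper can build them in one place. The name `github_headers` and its parameters are hypothetical, while the header names and values are the ones the functions in this section already use.

```python
from typing import Dict


def github_headers(token: str,
                   api_version: str = "2022-11-28",
                   accept: str = "application/vnd.github+json") -> Dict[str, str]:
    """Build the headers shared by the GitHub REST calls."""
    return {
        "Authorization": f"Bearer {token}",
        "X-GitHub-Api-Version": api_version,
        "Accept": accept,
    }


# JSON responses use the default Accept value; the diff request swaps in the diff media type.
json_headers = github_headers("TOKEN")
diff_headers = github_headers("TOKEN", accept="application/vnd.github.v3.diff")
```

Sending the token in the `Authorization` header for every call, including the diff request, also keeps it out of URLs that may end up in logs.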

# app/api_github.py
import os
from typing import Union

import git
import requests

from app.config import config
from app.utils import yellow, blue, red


def clone_or_pull(clone_url: str, repo_dir: str):
    # Update the local checkout if it already exists; otherwise clone it.
    if os.path.exists(repo_dir):
        blue("GIT PULL")
        git.Repo(repo_dir).remotes.origin.pull()
    else:
        blue("GIT CLONE")
        git.Repo.clone_from(clone_url, repo_dir)

def get_pr(pr_number: int) -> Union[dict, None]:
    # Fetch the PR metadata (the head commit SHA is read from it later).
    resp = requests.get(
        url=f"{config.github_api}/repos/{config.github_repo_path}/pulls/{pr_number}",
        headers={
            "Authorization": f"Bearer {config.github_token}",
            "X-GitHub-Api-Version": f"{config.github_api_version}",
            "Accept": "application/vnd.github+json",
        }
    )

    try:
        resp.raise_for_status()
        return resp.json()
    except requests.HTTPError as e:
        red("failed to get_pr()", e)
        return None


def get_pr_changed_files(pr_number: int) -> Union[list, None]:
    # This endpoint returns a JSON array with one entry per changed file.
    resp = requests.get(
        url=f"{config.github_api}/repos/{config.github_repo_path}/pulls/{pr_number}/files",
        headers={
            "Authorization": f"Bearer {config.github_token}",
            "X-GitHub-Api-Version": f"{config.github_api_version}",
            "Accept": "application/vnd.github+json",
        }
    )

    try:
        resp.raise_for_status()
        return resp.json()
    except requests.HTTPError as e:
        red("failed to get_pr_changed_files()", e)
        return None

def get_pr_diff(pr_number: int) -> Union[str, None]:
    # https://docs.github.com/en/rest/pulls/pulls?apiVersion=2022-11-28#get-a-pull-request
    # Same endpoint as get_pr(), but the diff media type returns the raw unified diff.
    # The token goes in the Authorization header rather than in the URL.
    resp = requests.get(
        url=f"{config.github_api}/repos/{config.github_repo_path}/pulls/{pr_number}",
        headers={
            "Authorization": f"Bearer {config.github_token}",
            "Accept": "application/vnd.github.v3.diff",
            # "Accept": "application/vnd.github.v3.patch",
        },
    )

    try:
        resp.raise_for_status()
        return resp.text
    except requests.HTTPError as e:
        red("failed to get_pr_diff()", resp.status_code, e)
        return None

def create_review_comment(
        pr_number: int,
        comment_content: str,
        last_commit_sha: str,
        filename: str,
        start_line: int,
        end_line: int
) -> Union[dict, None]:
    if not comment_content:
        return None

    payload = {
        "body": comment_content,
        "commit_id": last_commit_sha,
        "path": filename,
        "line": end_line,
        "side": "RIGHT",
    }
    # start_line only applies to multi-line comments, so it is sent only when
    # the range spans more than one line.
    if start_line < end_line:
        payload["start_line"] = start_line

    resp = requests.post(
        url=f"{config.github_api}/repos/{config.github_repo_path}/pulls/{pr_number}/comments",
        headers={
            "Authorization": f"Bearer {config.github_token}",
            "X-GitHub-Api-Version": f"{config.github_api_version}",
            "Accept": "application/vnd.github+json",
        },
        json=payload,
    )

    try:
        resp.raise_for_status()
        return resp.json()
    except requests.HTTPError as e:
        red(f"failed to create_review_comment()\n\t{e}\n\t{filename}\t {start_line}\t {end_line}\t {last_commit_sha}")
        return None

def show_rate_limit():
    # Print the remaining quota for the core REST API.
    resp = requests.get(
        url=f"{config.github_api}/rate_limit",
        headers={
            "Authorization": f"Bearer {config.github_token}",
            "Accept": "application/vnd.github+json",
        }
    )

    try:
        resp.raise_for_status()
        rate_limits = resp.json()
        blue("RATE-LIMITS:", rate_limits["resources"]["core"])
    except requests.HTTPError as e:
        red("failed to get_rate_limit()", e)

Model Client

A model client through which the app communicates with the model.

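# app/ai_model/model_client.py
from abc import ABC, abstractmethod
from typing import Union

from ollama import Client

# Extend this Union when adding other providers; only the Ollama client for now.
ModelClientType = Union[Client]


# Inheriting from ABC is what makes @abstractmethod enforceable.
class ModelClient(ABC):
    def __init__(self):
        self.client = self.get_client()

    @abstractmethod
    def get_client(self) -> ModelClientType:
        pass

    @abstractmethod
    def chat(self, main_code: str, user_input: str) -> str:
        pass

# app/ai_model/provider/ollama_client.py
from ollama import Client, Message, Options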

from app.ai_model.model_client import ModelClient, ModelClientType


class OllamaClient(ModelClient):
    # model_name = "fitpetmall-v4",
    model_name = "llama3.1"

    def get_client(self) -> ModelClientType:
        return Client(host="localhost:11434")

    def chat(self, main_code: str, user_input: str) -> str:
        stream_channel = self.client.chat(
            model=self.model_name,
            messages=_generate_messages(main_code, user_input),
            stream=True,
            keep_alive="5m",
            # https://github.com/ollama/ollama/blob/main/docs/modelfile.md#valid-parameters-and-values
            options=Options(
                temperature=0.5,
                top_k=30,
                top_p=0.3,
                mirostat_tau=1.0,
            ),
        )

        # Echo each streamed chunk as it arrives and accumulate the full reply.
        said = ""
        for channel in stream_channel:
            content = channel.get("message", {}).get("content", "")
            print(content, end="", flush=True)
            said += content
        return said

def _generate_messages(main_code: str, user_input: str):
    return [
        Message(
            role="system",
            content="""
# Instruction
You are a senior engineer at a FAANG company. Review the Changes based on Main Source Code and **offer a summary within 100 words**.
If the Changes is a just import statement, do not provide any summary.

# **Important Instruction**
Ensure that all answers are in KOREAN.

# Review Guidelines
- Ensure the code change functions as intended. Identify any logical errors or bugs.
- Check if the code change introduces any performance bottlenecks or inefficiencies. Suggest improvements if necessary.
- Look for any potential security vulnerabilities or issues. Provide recommendations to mitigate these risks.
- Ensure the readability and maintainability about naming conventions, code structure, and documentation.
- Check for any other potential issues or concerns. Provide guidance on how to address them.
""".strip()
        ),
        Message(
            role="user",
            # .strip() must be called inside the braces; written outside them,
            # the literal text ".strip()" would be sent to the model.
            content=f"""
# Main Source Code (Based on this)
{main_code.strip()}

# Changes (For Review)
{user_input.strip()}
""".strip(),
        )
    ]

App Main Function

import io
import os

from unidiff import PatchSet, PatchedFile, Hunk

from app.ai_model.model_client import ModelClient
from app.ai_model.provider.ollama_client import OllamaClient
from app.api_github import get_pr, show_rate_limit, get_pr_diff, clone_or_pull, create_review_comment
from app.config import config
from app.utils import green, yellow, read_file_to_str


def app_main(pr_number: int, model_client: ModelClient):
    show_rate_limit()

    pr = get_pr(pr_number)
    pr_diff = get_pr_diff(pr_number)
    if pr is None or pr_diff is None:
        # Both helpers return None on an HTTP error; there is nothing to review then.
        return
    head_sha = pr["head"]["sha"]

    patch_set = PatchSet(io.StringIO(pr_diff))
    patched_file: PatchedFile
    for patched_file in patch_set:
        # Only added or modified Kotlin files are reviewed.
        if patched_file.is_binary_file or patched_file.is_removed_file or not patched_file.path.endswith(".kt"):
            continue

        hunk: Hunk
        for hunk in patched_file:
            if hunk.added == 0:
                # Deletion-only hunk: no added line to attach a comment to.
                continue

            # Assumes the hunk opens with three context lines (the usual diff default).
            start_line = hunk.target_start + 3
            end_line = start_line + hunk.added - 1
            green(f"\n[REVIEW_START] {patched_file.path} (Line: {start_line} - {end_line})", bold=True, italic=True)

            tc130_said = model_client.chat(
                main_code=read_file_to_str(os.path.join(config.dir_main_code, patched_file.path)),
                user_input="".join(hunk.target).strip()
            )

            # The system prompt never asks for "LGTM", so this skip only triggers
            # when the model volunteers it.
            if "LGTM" in tc130_said:
                continue

            yellow(f"\n\n[REVIEW_CREATE] {patched_file.path}\t (Line: {start_line} - {end_line})", bold=True)
            create_review_comment(
                pr_number=pr_number,
                comment_content=tc130_said,
                last_commit_sha=head_sha,
                filename=patched_file.path,
                start_line=start_line,
                end_line=end_line
            )

if __name__ == '__main__':

clone_or_pull(

clone_url=config.clone_url,

repo_dir=config.dir_main_code

)

app_main(

pr_number=2130,

model_client=OllamaClient()

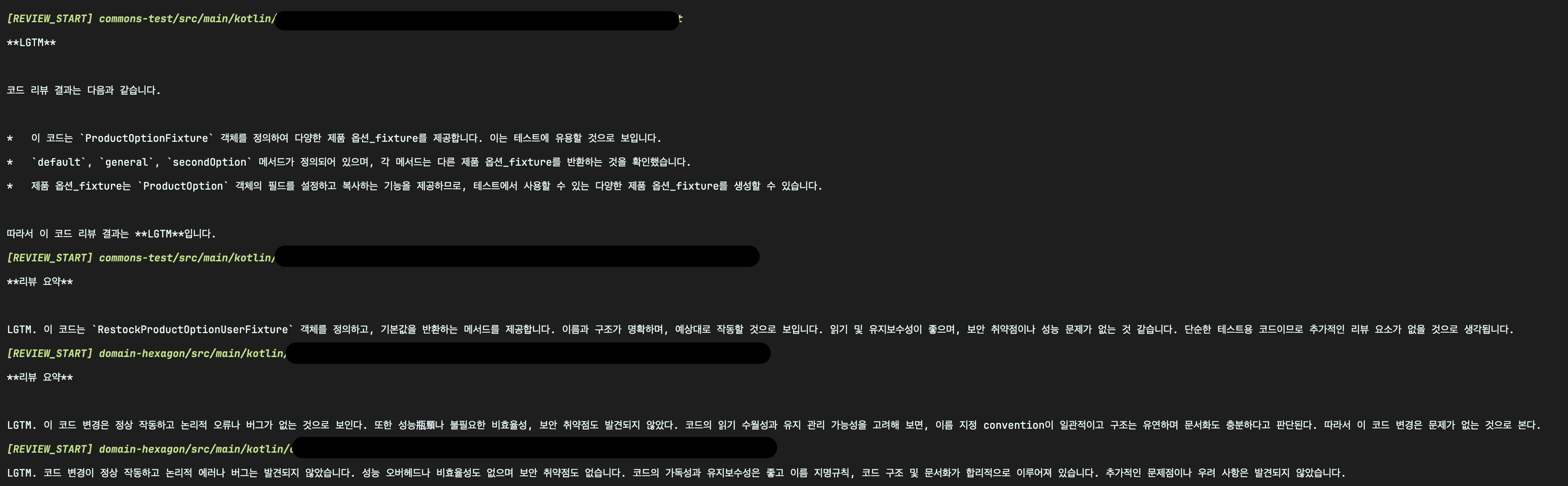

)실행 결과
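One detail worth a second look is the `hunk.target_start + 3` offset in `app_main`, which assumes every hunk opens with exactly three context lines. Hunks at the top of a file, or diffs generated with a different context size, break that assumption. Below is a minimal sketch of computing the range by walking the hunk lines instead. `added_line_range` is a hypothetical helper written against plain strings so that it runs without `unidiff`.

```python
from typing import List, Optional, Tuple


def added_line_range(target_start: int, hunk_lines: List[str]) -> Optional[Tuple[int, int]]:
    """Return (first, last) target-file line numbers of the added lines in a hunk.

    hunk_lines are the body lines of one unified-diff hunk, each prefixed with
    ' ' (context), '+' (added) or '-' (removed). Returns None if nothing was added.
    """
    line_no = target_start
    first: Optional[int] = None
    last: Optional[int] = None
    for line in hunk_lines:
        if line.startswith("+"):
            if first is None:
                first = line_no
            last = line_no
            line_no += 1
        elif line.startswith("-") or line.startswith("\\"):
            # Removed lines and "\ No newline at end of file" markers do not
            # exist in the target file, so the counter does not advance.
            continue
        else:
            line_no += 1
    if first is None or last is None:
        return None
    return first, last


# Three context lines, two additions, one trailing context line.
example = [" a\n", " b\n", " c\n", "+x\n", "+y\n", " d\n"]
print(added_line_range(10, example))  # (13, 14)
```

If the additions in a hunk are not contiguous, the returned range spans from the first to the last added line, context lines included. With `unidiff`, the inputs would presumably come from `hunk.target_start` and the string form of each line in the hunk.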
